Somehow along the way data lakes got the rap that you can dump "anything" into them. I think this is a carryover from the failed hippie free-data-love days of Hadoop and HDFS. No, a data lake is not a place where you dump any kind of JSON, text, XML, or log data and just crawl it with some magic schema crawler, then rinse and repeat. Sure, you can take the approach of consuming raw sources and then crawling them to catalog the structure. But this is a narrow case, and you do NOT do it in a thoughtless way. In many cases you don't need a crawler at all.

Now, with most data lakes you do want to consume data in raw form (ELT it, more or less), but this does not mean you just dump anything. You must still have expectations on structure and data schema contracts with the source systems you integrate with, including dealing with schema evolution and partition planning. Formats like Avro, Parquet and ORC are there so you can transform your data into normalized and ultimately well-curated (and DQ-ed) data models. Just because you have a "raw" zone in your data lake does not mean the entire lake is a dumping ground for data of any type, or that your data source structures can change at random.
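To make the schema contract idea concrete, here is a minimal sketch of a check you might run before a record lands in the raw zone. Everything in it is an assumption for illustration: the `orders` feed, its field names, the `Field` helper, and the Hive-style `order_date=` partition layout are hypothetical, and a real pipeline would more likely lean on Avro schemas or a schema registry than on hand-rolled Python.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Field:
    """One field in a (hypothetical) schema contract with a source system."""
    name: str
    type: type
    required: bool = True


# Hypothetical contract for an "orders" feed. "coupon_code" stands in for a
# field added later: it is optional, so older records still pass (a simple
# form of backward-compatible schema evolution).
ORDERS_CONTRACT = [
    Field("order_id", str),
    Field("amount", float),
    Field("order_date", str),  # assumed to be 'YYYY-MM-DD'
    Field("coupon_code", str, required=False),
]


def validate_record(record: dict, contract: List[Field]) -> List[str]:
    """Return a list of contract violations; an empty list means the record conforms."""
    errors = []
    known = {f.name for f in contract}
    for f in contract:
        value = record.get(f.name)
        if value is None:
            if f.required:
                errors.append(f"missing required field: {f.name}")
            continue
        if not isinstance(value, f.type):
            errors.append(
                f"{f.name}: expected {f.type.__name__}, got {type(value).__name__}"
            )
    # Fields the contract does not know about are flagged rather than silently kept.
    for name in record:
        if name not in known:
            errors.append(f"unexpected field: {name}")
    return errors


def partition_path(record: dict) -> str:
    """Build a partition prefix from the planned partition key (order_date)."""
    return f"orders/order_date={record['order_date']}/"


good = {"order_id": "A1", "amount": 9.5, "order_date": "2024-01-15"}
bad = {"order_id": "A1", "amount": "9.5", "order_date": "2024-01-15", "foo": 1}

print(validate_record(good, ORDERS_CONTRACT))
print(validate_record(bad, ORDERS_CONTRACT))
print(partition_path(good))
```

The point is not the particular checks but that the raw zone still has a gate: a record that breaks the agreed structure gets rejected or quarantined instead of quietly becoming someone else's problem downstream.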

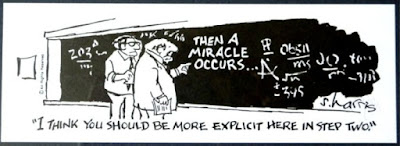

Miracles required? This is what most of today's strategic AI and even BI/Analytics engineering and planning looks like. If you don't have your data modeled well and your data orchestration modularized and under control, then achieving the promise of cost-effective and maintainable ML models and self-service BI is a leap of faith at best. Forget about being a data-driven company if you are not yet a data-model-driven company.

A data lake is a modern DW built on highly scalable cloud storage and compute, and based on open data formats and open federated query engines. You can't escape the need for well-thought-out and curated data models. It does not matter whether you are using Parquet and S3 or Snowflake and Redshift. Data models are what make BI and Analytics function.
